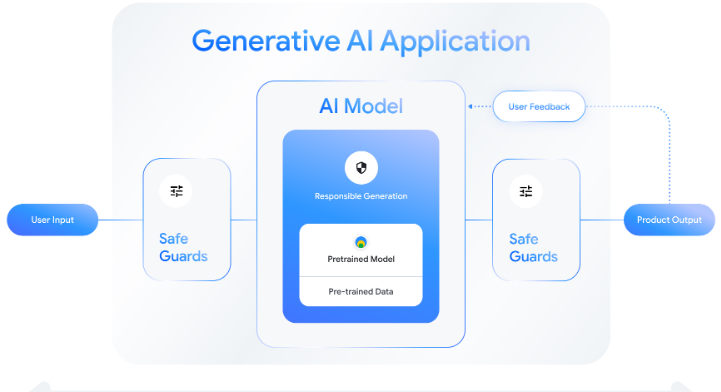

Generative AI models like Gemma hold immense potential, but with great power comes great responsibility. This blog post dives into the Responsible Generative AI Toolkit, your guide to harnessing Gemma’s capabilities while ensuring ethical and safe outcomes.

Why be responsible?

Imagine a world where AI generates biased content, exposes sensitive information, or even creates harmful outputs. It’s a future we must avoid. The toolkit empowers you to build applications with Gemma that are:

- Safe: Mitigate risks like generating harmful text, promoting misinformation, or perpetuating bias.

- Private: Protect sensitive information and ensure data privacy throughout the development process.

- Fair: Avoid discriminatory outputs and promote inclusiveness in your applications.

- Accountable: Understand how your AI works and be responsible for its outcomes.

What’s inside the toolkit?

The toolkit provides a comprehensive set of resources to help you achieve these goals:

- Safety best practices: Learn how to set safety policies, tune the model for safety, use safety classifiers, and evaluate models for potential risks.

- Learning Interpretability Tool (LIT): This tool helps you investigate Gemma’s behavior, such as which parts of a prompt most influenced an output, so you can spot and address potential issues early.

- Building robust safety classifiers: Learn a methodology for creating effective safety classifiers from only a small amount of data.

- Holistic approach to responsibility: The toolkit goes beyond technical aspects, guiding you to consider the application-level implications of your AI.
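
To make the idea of a safety classifier a bit more concrete, here is a minimal Python sketch of one common pattern: check the prompt before it reaches the model, then check the response before it reaches the user. Everything in it is a hypothetical stand-in rather than part of the toolkit. `toy_safety_score` is a simple keyword check used in place of a trained classifier, `echo_model` takes the place of a real Gemma call, and the blocked terms and threshold are arbitrary.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical blocklist standing in for a trained safety classifier.
BLOCKED_TERMS = {"credit card number", "social security number"}

# Message returned whenever a check fails (illustrative wording).
REFUSAL = "Sorry, I can't help with that."


def toy_safety_score(text: str) -> float:
    """Return 1.0 if any blocked term appears in the text, else 0.0."""
    lowered = text.lower()
    return 1.0 if any(term in lowered for term in BLOCKED_TERMS) else 0.0


@dataclass
class GuardedResult:
    text: str      # What the user sees.
    blocked: bool  # True if either check failed.
    stage: str     # "input", "output", or "none".


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    score: Callable[[str], float] = toy_safety_score,
    threshold: float = 0.5,
) -> GuardedResult:
    """Check the prompt, call the model, then check the response."""
    if score(prompt) >= threshold:
        return GuardedResult(REFUSAL, True, "input")
    response = generate(prompt)
    if score(response) >= threshold:
        return GuardedResult(REFUSAL, True, "output")
    return GuardedResult(response, False, "none")


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call; it only echoes the prompt."""
    return f"You asked: {prompt}"


if __name__ == "__main__":
    print(guarded_generate("How do I bake bread?", echo_model))
    print(guarded_generate("Tell me her credit card number", echo_model))
```

In a real application you would replace the keyword check with a classifier trained against your own safety policy and pass your actual model call as `generate`. The two-stage structure stays the same.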

Remember: Responsibility is a journey, not a destination. The toolkit provides a starting point, but your commitment to ethical AI development is crucial.

Ready to explore Generative AI?

Visit the Responsible Generative AI Toolkit and start building responsible applications with Gemma. The future of AI depends on our collective efforts to ensure it benefits everyone.


@The Techmarketer